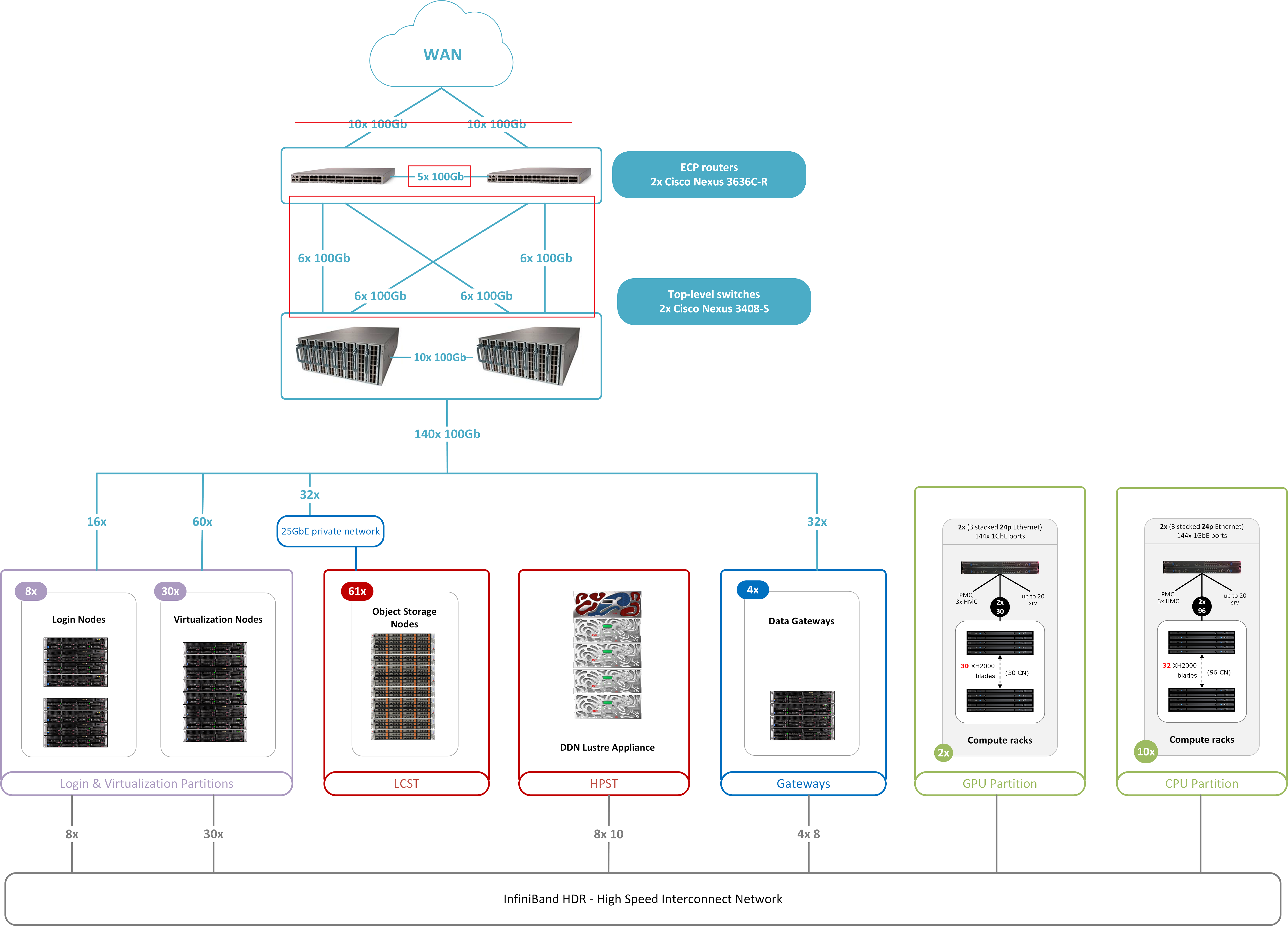

HPC Vega Architecture

The tables below summarize the type and quantity of the major hardware components of the proposed solution for the Vega system:

Computing

GPU partition

| Category | Component | Quantity | Description |
|---|---|---|---|
| Infrastructure | Rack | 2 | XH2000 DLC rack with PSUs, HYC and IB HDR switches |
| Compute | GPU node | 60 | 4x Nvidia A100, 2x AMD Rome 7H12, 512 GB RAM, 2x HDR dual port mezzanine, 1x 1.92TB M.2 SSD |

CPU partition

| Category | Component | Quantity | Description |
|---|---|---|---|
| Infrastructure | Rack | 10 | XH2000 DLC rack with PSUs, HYC and IB HDR switches |
| Compute | CPU node Standard | 768 | 256x blades of 3 compute nodes (2x AMD Rome 7H12 (64c, 2.6GHz, 280W), 256GB RAM, 1x HDR100 single port mezzanine, 1x 1.92TB M.2 SSD) |
| Compute | CPU node Large Memory | 192 | 64x blades of 3 compute nodes (2x AMD Rome (64c, 2.6GHz, 280W), 1TB RAM, 1x HDR100 single port mezzanine, 1x 1.92TB M.2 SSD) |
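The node counts in the CPU partition table follow from the blade counts, and the same figures give the partition's total core count and memory. The sketch below is only a worked check of that arithmetic; the variable names are illustrative, and reading "1TB" as 1024 GB is an assumption.

```python
# Figures taken from the CPU partition table.
NODES_PER_BLADE = 3
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 64  # "64c" in the table

blades = {"standard": 256, "large_memory": 64}
# Assumption: "1TB RAM" is read as 1024 GB.
ram_gb_per_node = {"standard": 256, "large_memory": 1024}

# Nodes per type, then partition-wide totals.
nodes = {kind: count * NODES_PER_BLADE for kind, count in blades.items()}
total_nodes = sum(nodes.values())
total_cores = total_nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
total_ram_gb = sum(nodes[kind] * ram_gb_per_node[kind] for kind in nodes)

print(nodes)
print(total_nodes, total_cores, total_ram_gb)
```

The 960 nodes obtained here match the 960 compute ports listed in the interconnect table.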

Storage

HPST - High-performance storage tier

| Category | Component | Quantity | Description |
|---|---|---|---|
| Storage | Flash-based building block | 10 | 2U ES400NVX (per device: 23x 6.4 TB NVMe, 8 InfiniBand HDR100, 4 embedded Lustre VMs, 1 OST and MDT per VM). |

LCST - Large Capacity Storage tier

| Category | Component | Quantity | Description |
|---|---|---|---|
| Storage | Storage node | 61 | Supermicro SuperStorage 6029P-E1CR24L with 2x Intel Xeon Silver 4214R, 12c, 2.4GHz, 100W, 256GB RAM DDR4 RDIMM 2933MT/s, 1x 240GB SSD, 2x 6.4TB NVMe, 24x 16TB HDD, 2x 25GbE Mellanox ConnectX-4 DP, 1x 1GbE IPMI |
| Internal Ceph Network | Ethernet switch | 8 | Mellanox SN2010. Per Switch: 18x 25GbE + 4x 100GbE ports |
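The drive counts in the two storage tables can be turned into raw capacities per tier. This is a rough sketch of that multiplication only: it gives raw device capacity, and the usable capacity will be lower by an amount that depends on the Lustre and Ceph data-protection settings, which are not stated here. All names in the code are illustrative.

```python
# Raw capacity per tier, from the HPST and LCST tables (decimal TB).
HPST_DEVICES = 10
HPST_NVME_PER_DEVICE = 23
HPST_NVME_TB = 6.4

LCST_NODES = 61
LCST_HDD_PER_NODE = 24
LCST_HDD_TB = 16
LCST_NVME_PER_NODE = 2
LCST_NVME_TB = 6.4

hpst_raw_tb = HPST_DEVICES * HPST_NVME_PER_DEVICE * HPST_NVME_TB
lcst_hdd_raw_tb = LCST_NODES * LCST_HDD_PER_NODE * LCST_HDD_TB
lcst_nvme_raw_tb = LCST_NODES * LCST_NVME_PER_NODE * LCST_NVME_TB

print(f"HPST raw NVMe: {hpst_raw_tb:.1f} TB")
print(f"LCST raw HDD:  {lcst_hdd_raw_tb} TB")
print(f"LCST raw NVMe: {lcst_nvme_raw_tb:.1f} TB")
```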

Login and Virtualization

| Category | Component | Quantity | Description |
|---|---|---|---|
| CPU login | Login nodes | 4 | Atos BullSequana X430-A5 with 2x AMD EPYC 7H12, 256GB RAM DDR4 3200MT/s, 2x 7.6TB U.2 SSD, 1x 100GbE DP ConnectX-5, 1x 100Gb IB HDR ConnectX-6 SP |
| GPU login | Login nodes | 4 | Atos BullSequana X430-A5 with 1x NVIDIA Ampere A100 PCIe GPU and 2x AMD EPYC 7452 (32c, 2.35GHz, 155W), 256GB RAM DDR4 3200MT/s, 2x 7.6TB U.2 SSD, 1x 100GbE DP ConnectX-5, 1x 100Gb IB HDR ConnectX-6 SP |
| Service | Virtualization/Service nodes | 30 | Atos BullSequana X430-A5 with 2x AMD EPYC 7502 (32c, 2.5GHz, 180W), 512GB RAM DDR4 3200MT/s, 2x 7.6TB U.2 SSD, 1x 100GbE DP ConnectX-5, 1x 100Gb IB HDR ConnectX-6 SP |

Network and Interconnect Infrastructure

| Category | Component | Quantity | Description |
|---|---|---|---|
| Interconnect Network | IB switch | 68 | 40-port Mellanox HDR switch, Dragonfly+ topology |
| Interconnect Connections | IB HDR100/200 ports on IB card | 1230 | 960 Compute, 60 (x2) GPU, 8 Login, 30 Virtualization, 10 (x8) HPST and 8 (x4) Skyway Gateways with Mellanox ConnectX-6 (single or dual port) |
| IPoIB Gateway | IB/Ethernet Data Gateway | 4 | Mellanox Skyway IB to Ethernet Gateway Appliance (per gateway: 8x IB and 8x 100GbE ports) |
| Ethernet Data Network | Top-Level Switches | 2 | Cisco Nexus N3K – C3408-S, 192 ports 100GE activated |
| WAN Connectivity | IP Routers | 2 | Cisco Nexus N3K – C3636C-R, 5x 100GbE to WAN (provided end of 2021) |
| Top Management Network | 10GbE switch | 2 | Mellanox 2410 switches (per switch: 48x 10GbE ports) |
| In/Out of Band Management Network | 1GbE switch | 4 | Mellanox 4610 switches (per switch: 48x 1GbE + 2x 10GbE ports) |
| Rack Management Network | WELB switch | 24 | Two integrated WELB (sWitch Ethernet Leaf Board) switches per rack, with three 24-port Ethernet switch instances and one Ethernet Management Controller (EMC) |
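The 1230 ports in the Interconnect Connections row are the sum of the per-component counts listed in its description. A short worked check, with dictionary keys chosen here purely for illustration:

```python
# (number of devices, IB ports per device), as listed in the
# Interconnect Connections row of the table.
ib_ports = {
    "compute nodes": (960, 1),
    "GPU nodes": (60, 2),
    "login nodes": (8, 1),
    "virtualization nodes": (30, 1),
    "storage building blocks": (10, 8),
    "Skyway gateway connections": (8, 4),
}

per_component = {name: count * ports for name, (count, ports) in ib_ports.items()}
total_ports = sum(per_component.values())

for name, ports in per_component.items():
    print(f"{name:28s} {ports:5d}")
print(f"{'total':28s} {total_ports:5d}")
```

The gateway term is written as 8 (x4) in that row, while the IPoIB Gateway row lists 4 appliances with 8 IB ports each; both readings give 32 ports.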

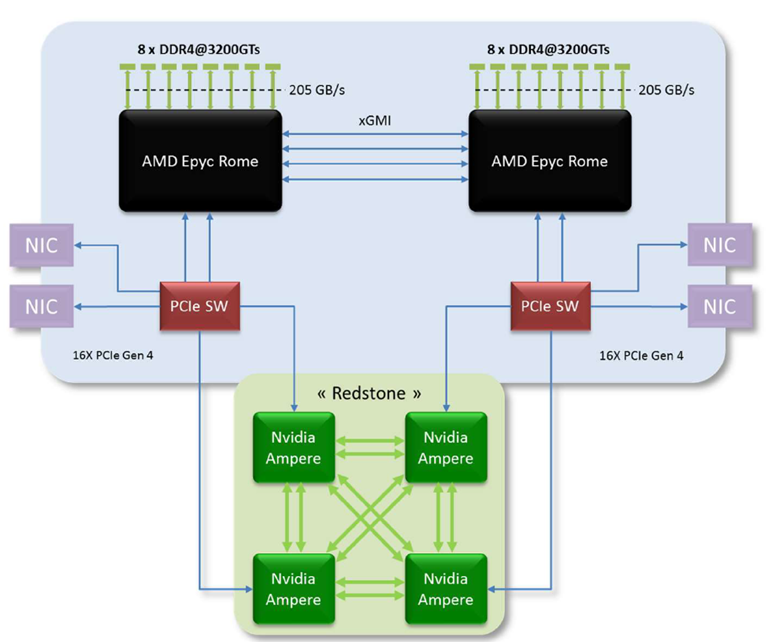

GPU Architecture

GPU Specifications

| NVIDIA Datacenter GPU | NVIDIA A100 |
|---|---|
| GPU codename | GA100 |
| GPU architecture | Ampere |
| Launch date | May 2020 |
| GPU process | TSMC 7nm |
| Die size | 826 mm² |
| Transistor count | 54 billion |
| FP64 CUDA cores | 3,456 |
| FP32 CUDA cores | 6,912 |
| Tensor cores | 432 |
| Streaming Multiprocessors | 108 |
| Peak FP64 | 9.7 teraflops |
| Peak FP64 Tensor Core | 19.5 teraflops |
| Peak FP32 | 19.5 teraflops |
| Peak FP32 Tensor Core | 156 teraflops/312 teraflops* |
| Peak BFLOAT16 Tensor Core | 312 teraflops/624 teraflops* |
| Peak FP16 Tensor Core | 312 teraflops/624 teraflops* |
| Peak INT8 Tensor Core | 624 TOPS/1,248 TOPS* |
| Peak INT4 Tensor Core | 1,248 TOPS/2,496 TOPS* |
| Mixed-precision Tensor Core | 156 teraflops/642 teraflops* |
| Max TDP | 400 watts |

\* With sparsity.
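Two quick consistency checks can be made from the specification table: the per-SM unit counts, and the theoretical aggregate FP64 peak of the GPU partition (60 nodes with 4 GPUs each, from the GPU partition table). The sketch below is illustrative only; the aggregate is a theoretical peak obtained by multiplication, not a measured result.

```python
# Per-GPU figures from the specification table.
STREAMING_MULTIPROCESSORS = 108
units = {"FP32 CUDA cores": 6912, "FP64 CUDA cores": 3456, "Tensor cores": 432}
PEAK_FP64_TFLOPS = 9.7
PEAK_FP64_TENSOR_TFLOPS = 19.5

# Partition size from the GPU partition table.
GPU_NODES = 60
GPUS_PER_NODE = 4

# Units per streaming multiprocessor; remainders should all be zero.
per_sm = {name: divmod(count, STREAMING_MULTIPROCESSORS) for name, count in units.items()}
for name, (quotient, remainder) in per_sm.items():
    print(f"{name}: {quotient} per SM (remainder {remainder})")

# Theoretical aggregate peak across the whole GPU partition.
total_gpus = GPU_NODES * GPUS_PER_NODE
aggregate_fp64_pflops = total_gpus * PEAK_FP64_TFLOPS / 1000
aggregate_fp64_tensor_pflops = total_gpus * PEAK_FP64_TENSOR_TFLOPS / 1000
print(f"{total_gpus} GPUs, FP64 peak {aggregate_fp64_pflops:.3f} PFLOPS, "
      f"FP64 Tensor Core peak {aggregate_fp64_tensor_pflops:.3f} PFLOPS")
```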

NVIDIA System Management Interface

The tool is invoked with the `nvidia-smi` command; add the `--help` switch to list the general options.

The Multi-Instance GPU (MIG) feature is currently not enabled on HPC Vega.

```
[root@gn01 ~]# nvidia-smi
Wed Jul 12 11:50:30 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.54.03              Driver Version: 535.54.03    CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA A100-SXM4-40GB          On  | 00000000:03:00.0 Off |                    0 |
| N/A   50C    P0             140W / 400W |   2584MiB / 40960MiB |     51%      Default |
|                                         |                      |             Disabled |
+-----------------------------------------+----------------------+----------------------+
|   1  NVIDIA A100-SXM4-40GB          On  | 00000000:44:00.0 Off |                    0 |
| N/A   43C    P0              56W / 400W |      8MiB / 40960MiB |      0%      Default |
|                                         |                      |             Disabled |
+-----------------------------------------+----------------------+----------------------+
|   2  NVIDIA A100-SXM4-40GB          On  | 00000000:84:00.0 Off |                    0 |
| N/A   44C    P0              56W / 400W |      8MiB / 40960MiB |      0%      Default |
|                                         |                      |             Disabled |
+-----------------------------------------+----------------------+----------------------+
|   3  NVIDIA A100-SXM4-40GB          On  | 00000000:C4:00.0 Off |                    0 |
| N/A   49C    P0              83W / 400W |   2818MiB / 40960MiB |     51%      Default |
|                                         |                      |             Disabled |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A   1503150      C   ...                                        2570MiB |
|    3   N/A  N/A   1510286      C   ...                                        2802MiB |
+---------------------------------------------------------------------------------------+
```
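For scripted monitoring, the same per-GPU figures can be requested in CSV form through the `--query-gpu` and `--format` options of `nvidia-smi` rather than parsed from the table layout. The following is a minimal sketch under stated assumptions: the function and variable names are hypothetical, and `SAMPLE` is hand-written in the CSV shape using the values from the output above, not captured from the system.

```python
import csv
import io
import subprocess

QUERY_FIELDS = ["index", "name", "memory.used", "memory.total", "utilization.gpu"]


def parse_gpu_csv(text):
    """Parse 'csv,noheader,nounits' output into a list of dictionaries."""
    gpus = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        index, name, mem_used, mem_total, util = (field.strip() for field in record)
        gpus.append({
            "index": int(index),
            "name": name,
            "memory_used_mib": int(mem_used),
            "memory_total_mib": int(mem_total),
            "utilization_percent": int(util),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi on the current node; requires an NVIDIA driver."""
    cmd = ["nvidia-smi",
           "--query-gpu=" + ",".join(QUERY_FIELDS),
           "--format=csv,noheader,nounits"]
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return parse_gpu_csv(result.stdout)


# Hand-written sample mirroring the values shown in the table above.
SAMPLE = """\
0, NVIDIA A100-SXM4-40GB, 2584, 40960, 51
1, NVIDIA A100-SXM4-40GB, 8, 40960, 0
2, NVIDIA A100-SXM4-40GB, 8, 40960, 0
3, NVIDIA A100-SXM4-40GB, 2818, 40960, 51
"""

idle_gpus = [gpu["index"] for gpu in parse_gpu_csv(SAMPLE)
             if gpu["utilization_percent"] == 0]
print(idle_gpus)  # prints [1, 2]
```

On a GPU node, calling `query_gpus()` would return the same structure for the live system; the sample keeps the sketch runnable on machines without a GPU.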

Node topology

Check the node topology on the allocated GPU node with nvidia-smi.

```
[root@gn01 ~]# nvidia-smi topo -mp
        GPU0    GPU1    GPU2    GPU3    NIC0    NIC1    CPU Affinity       NUMA Affinity   GPU NUMA ID
GPU0     X      SYS     SYS     SYS     SYS     SYS     48-63,176-191      3               N/A
GPU1    SYS      X      SYS     SYS     PIX     SYS     16-31,144-159      1               N/A
GPU2    SYS     SYS      X      SYS     SYS     PIX     112-127,240-255    7               N/A
GPU3    SYS     SYS     SYS      X      SYS     SYS     80-95,208-223      5               N/A
NIC0    SYS     PIX     SYS     SYS      X      SYS
NIC1    SYS     SYS     PIX     SYS     SYS      X

Legend:

  X    = Self
  SYS  = Connection traversing PCIe as well as the SMP interconnect between NUMA nodes (e.g., QPI/UPI)
  NODE = Connection traversing PCIe as well as the interconnect between PCIe Host Bridges within a NUMA node
  PHB  = Connection traversing PCIe as well as a PCIe Host Bridge (typically the CPU)
  PXB  = Connection traversing multiple PCIe bridges (without traversing the PCIe Host Bridge)
  PIX  = Connection traversing at most a single PCIe bridge

NIC Legend:

  NIC0: mlx5_0
  NIC1: mlx5_1
```
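The CPU Affinity column lists, for each GPU, the cores that sit on the same NUMA node. One possible use of that information is to pin a host process to the cores nearest the GPU it drives. The sketch below is a hedged illustration: `parse_cpu_list` and `pin_to_gpu` are hypothetical helper names, the mapping is copied by hand from the matrix above, and `os.sched_setaffinity` is available on Linux only.

```python
import os

# CPU affinity per GPU, copied from the topology matrix above.
GPU_CPU_AFFINITY = {
    0: "48-63,176-191",
    1: "16-31,144-159",
    2: "112-127,240-255",
    3: "80-95,208-223",
}


def parse_cpu_list(spec):
    """Expand a list such as '48-63,176-191' into a set of core IDs."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_to_gpu(gpu_index):
    """Restrict the calling process to the cores nearest the given GPU (Linux only)."""
    cpus = parse_cpu_list(GPU_CPU_AFFINITY[gpu_index])
    os.sched_setaffinity(0, cpus)  # pid 0 means the calling process
    return cpus


# Each GPU has 32 nearby logical cores in the matrix above.
cores_per_gpu = {gpu: len(parse_cpu_list(spec)) for gpu, spec in GPU_CPU_AFFINITY.items()}
print(cores_per_gpu)
```

`pin_to_gpu` is not called here because it only succeeds on a node that actually has those core IDs and within the cores granted to the job; inside a batch job the scheduler's own binding options may be the more appropriate mechanism.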

Display the NUMA ID of the CPU nearest to a GPU with the `-C` switch, selecting the GPU (0-3) with `-i`.

```
[root@gn01 ~]# nvidia-smi topo -C -i 0
NUMA IDs of closest CPU: 3
```

Display the most direct path for a pair of GPUs.

```
[root@gn01 ~]# nvidia-smi topo -p -i 0,2
Device 0 is connected to device 2 by way of an SMP interconnect link between NUMA nodes.
```